Deploying DeepSeek R1 Distill Series Models on RTX 4090 with Ollama and Optimization

Introduction

DeepSeek-R1 has recently gained significant attention for its low cost and strong performance. DeepSeek has also officially released several distilled models in various sizes, which makes it possible to try reasoning models on consumer-grade hardware:

- deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B

- deepseek-ai/DeepSeek-R1-Distill-Qwen-7B

- deepseek-ai/DeepSeek-R1-Distill-Llama-8B

- deepseek-ai/DeepSeek-R1-Distill-Qwen-14B

- deepseek-ai/DeepSeek-R1-Distill-Qwen-32B

- deepseek-ai/DeepSeek-R1-Distill-Llama-70B

However, these distilled models fall well short of the full DeepSeek-R1 model. For instance, DeepSeek-R1-Distill-Qwen-32B only reaches the level of o1-mini.

This can be seen in the official chart.

Ollama provides a convenient interface and tools for using and managing models, with llama.cpp as its backend. It supports both CPU and GPU inference.


Installation of Ollama

Follow the instructions on the Download Ollama page to complete the installation. My environment is as follows:

- Operating system: Windows 11

- GPU: NVIDIA RTX 4090

- CPU: Intel 13900K

- Memory: 128 GB DDR5

Creating Models

After installing Ollama, we need to create models. One way is to pull from the Ollama Library.

|

|

However, the pulled model defaults to a context length of 4096 tokens. That is too short for a reasoning model, whose thinking output alone can run to thousands of tokens, so we need to increase it.

One way is to edit the Modelfile directly. If you do not know what a model's Modelfile contains, run the following command to view it.

|

|

Here is the Modelfile I use. Save it in a new text file, for example DeepSeek-R1-Distill-Qwen-32B-Q4_K_M.txt.

|

|

The Modelfile contains several parts, but we only need to change two of them: the FROM statement, which specifies the model to build from, and num_ctx, the context length (4096 by default unless overridden in an API request). Here I set it to 16000. The longer the context, the more memory and compute it consumes.
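As a minimal sketch of the two directives discussed above, the following Python helper renders a Modelfile that contains only FROM and PARAMETER num_ctx. The helper name `render_modelfile` and the tag `deepseek-r1:32b` are assumptions for illustration; they are not the author's Modelfile, which includes more parts.

```python
def render_modelfile(base_model: str, num_ctx: int) -> str:
    """Return a minimal Modelfile with a base model and a context length."""
    if num_ctx <= 0:
        raise ValueError("num_ctx must be a positive number of tokens")
    # FROM names the model to build from.
    # PARAMETER num_ctx sets the context length in tokens.
    return f"FROM {base_model}\nPARAMETER num_ctx {num_ctx}\n"


if __name__ == "__main__":
    # "deepseek-r1:32b" is a placeholder tag; substitute the model you pulled.
    print(render_modelfile("deepseek-r1:32b", 16000), end="")
```

Saving the printed text to a file gives something that can be passed to ollama create with the -f option.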

Note

In my testing, an RTX 4090 can run a 32B q4_K_M quantized model at a context length of 16K with the KV Cache quantized to q8_0 and Flash Attention enabled. With the same configuration, a 14B q4_K_M quantized model can reach a context length of 64K. I will explain more about KV Cache quantization and Flash Attention later.
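To get a rough feel for why the cache type matters at these context lengths, here is a back-of-the-envelope estimate of KV cache size. It is a sketch under stated assumptions: the formula counts one key and one value vector per layer, per KV head, per token, and the architecture numbers in the example (64 layers, 8 KV heads, head dimension 128) are what I believe the Qwen 32B base uses, so check them against the model's config before relying on the result. Real memory use also includes the weights and compute buffers.

```python
# Bytes per cached element in llama.cpp's storage formats.
# q8_0 packs 32 values into 34 bytes; q4_0 packs 32 values into 18 bytes.
BYTES_PER_ELEMENT = {"f16": 2.0, "q8_0": 34 / 32, "q4_0": 18 / 32}


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, num_ctx, cache_type="f16"):
    """Estimate the KV cache size in bytes for a full context window."""
    # Factor 2: both keys and values are cached for every token.
    elements = 2 * n_layers * n_kv_heads * head_dim * num_ctx
    return elements * BYTES_PER_ELEMENT[cache_type]


if __name__ == "__main__":
    # Assumed architecture values; verify against the model you deploy.
    for cache_type in ("f16", "q8_0", "q4_0"):
        size = kv_cache_bytes(64, 8, 128, 16000, cache_type)
        print(f"{cache_type}: {size / 2**30:.2f} GiB")
```

Under these assumptions, q8_0 needs a little over half the cache memory of f16 at the same context length, which is the headroom that makes longer contexts fit on a 24 GB card.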

After creating the Modelfile, we can create the model using the following command:

|

|

Tip

The format is as follows:

ollama create <name of the model to be created> -f <path and name of Modelfile>

During this process, Ollama pulls the base model and creates the new one. After it completes, run ollama list to check the model list; you should see output similar to the following.

|

|
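As noted earlier, num_ctx can also be set per request through the API instead of the Modelfile. The sketch below builds such a request for Ollama's /api/generate endpoint using only the standard library. The function names and the model name in the usage note are hypothetical; the address assumes Ollama is listening on its default 127.0.0.1:11434.

```python
import json
import urllib.request


def build_generate_request(model, prompt, num_ctx, host="http://127.0.0.1:11434"):
    """Build a non-streaming /api/generate request that overrides num_ctx."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a stream
        "options": {"num_ctx": num_ctx},  # per-request context length
    }
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, num_ctx):
    """Send the request to a running Ollama server and return the reply text."""
    request = build_generate_request(model, prompt, num_ctx)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, a call such as generate("my-r1-32b", "Why is the sky blue?", 16000) would return the model's answer, where my-r1-32b stands in for whatever name you passed to ollama create.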

Optimization

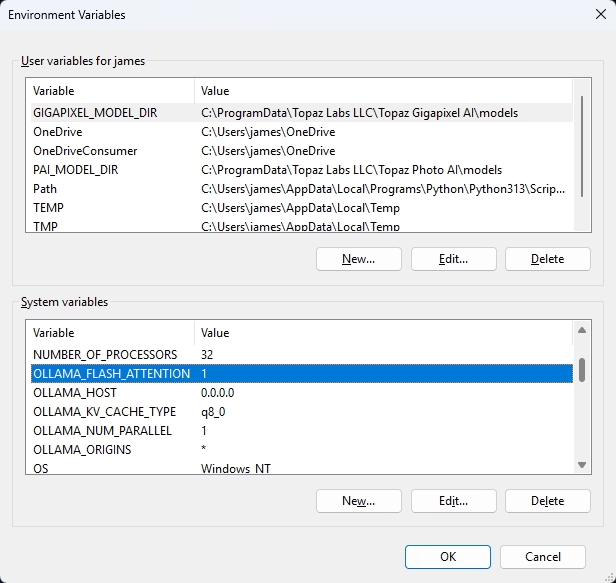

Ollama supports multiple optimization parameters controlled by environment variables.

- OLLAMA_FLASH_ATTENTION: Set to 1 to enable and 0 to disable.

- OLLAMA_HOST: IP address Ollama listens on. The default is 127.0.0.1; change it to 0.0.0.0 if you want to serve external clients.

- OLLAMA_KV_CACHE_TYPE: Set to q8_0 or q4_0. The default value is f16 (16-bit floating point).

- OLLAMA_NUM_PARALLEL: Number of parallel requests. Higher values give more throughput but consume more memory. Generally set to 1.

- OLLAMA_ORIGINS: CORS cross-origin request settings.

Flash Attention must be enabled, since KV cache quantization only takes effect with it. I recommend setting OLLAMA_KV_CACHE_TYPE to q8_0. In my tests, q4_0 shortened R1's reasoning, possibly because long reasoning chains rely more heavily on accurately cached context.
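For quick experiments, the recommended settings can also be applied to a single server process rather than system-wide. The following is a sketch, assuming the ollama binary is on PATH and no other Ollama instance is already running; the helper names `ollama_env` and `start_server` are hypothetical.

```python
import os
import subprocess


def ollama_env(flash_attention=True, kv_cache_type="q8_0", num_parallel=1, base_env=None):
    """Return a copy of the environment with the Ollama tuning variables set."""
    env = dict(os.environ if base_env is None else base_env)
    env["OLLAMA_FLASH_ATTENTION"] = "1" if flash_attention else "0"
    env["OLLAMA_KV_CACHE_TYPE"] = kv_cache_type
    env["OLLAMA_NUM_PARALLEL"] = str(num_parallel)
    return env


def start_server(env):
    """Launch `ollama serve` with the given environment and return the process."""
    # Assumption: `ollama` is on PATH and the default port is free.
    return subprocess.Popen(["ollama", "serve"], env=env)
```

Calling start_server(ollama_env()) starts a server with Flash Attention on and a q8_0 KV cache; the variables disappear when that process exits, which makes it easy to compare cache types.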

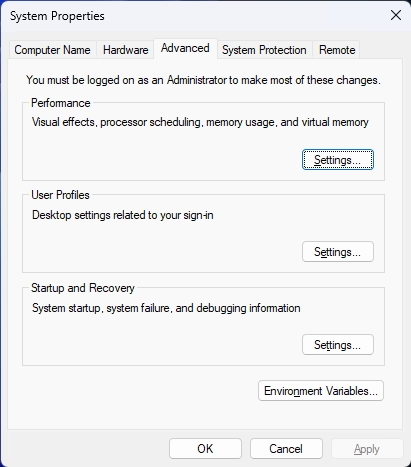

Windows 11

To set environment variables on Windows 11, go to “Advanced System Settings,” then choose “Environment Variables.”

After that, select “New” to add a new variable. Restart Ollama for changes to take effect.

macOS

On macOS, you can use commands like the following:

|

|

Restart Ollama after setting environment variables.

Linux

On Linux, after installing Ollama, edit the ollama.service file to change its environment variables:

|

|

Then add the Environment field under [Service], like this:

|

|

Save and reload changes:

|

|
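When several variables need to be set, it can help to generate the [Service] lines rather than type them by hand. The sketch below renders one Environment line per variable in the quoted form that systemd accepts. The helper name `render_service_override` is hypothetical, and the sketch assumes values contain no double quotes or backslashes, which would need escaping.

```python
def render_service_override(variables):
    """Render a [Service] section with one Environment line per variable."""
    lines = ["[Service]"]
    for name, value in variables.items():
        # Each assignment is wrapped in double quotes as a single unit.
        lines.append(f'Environment="{name}={value}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_service_override({
        "OLLAMA_FLASH_ATTENTION": "1",
        "OLLAMA_KV_CACHE_TYPE": "q8_0",
    }), end="")
```

The printed text can be pasted into the service override before saving and reloading.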

Limitations

llama.cpp, the backend used by Ollama, is not designed for high-concurrency, high-performance production environments. For example, its multi-GPU support is suboptimal: it splits model layers across multiple GPUs so that the model fits in memory, but only one GPU works at a time. Using multiple GPUs simultaneously requires tensor parallelism, for which SGLang or vLLM are better suited.

In terms of performance, Ollama does not match SGLang or vLLM in throughput. Its multimodal model support is also limited, and new models are adopted slowly.

Clients

For easier use of models within Ollama, I recommend two clients. Cherry Studio is a local client that I find useful, while LobeChat is a cloud-based client (I previously wrote an article on deploying the database version of LobeChat using Docker Compose).

New API is a tool that I find useful for managing APIs and serving them in the OpenAI API format. Immersive Translate is a highly rated translation plugin that can call the OpenAI API for translations, so it can also be combined with Ollama and New API. In my experience, the resulting translation quality far exceeds that of traditional machine translation.
